Nvidia is leading an AI infrastructure boom. Cloud Czars, stuffed with cash, are buying equipment and software with both hands.

But at some point, they must get a return on these huge investments. On their present course, they won’t get it.

Once it’s clear that they’ve gone the wrong way, the boom will end, and you’ll see a crash like the 2000 dot-bomb. The AI crash won’t kill the technology, any more than the dot-bomb killed e-commerce 25 years ago.

But a lot of people are going to lose a lot of money.

AI Meet AI

The first point to make is that machines are better at talking to other machines than to people. This is the low-hanging fruit of AI, and it’s delicious.

I first wrote about this nearly five years ago. It’s the Machine Internet. Software can collect vast amounts of input and process it faster than any human. That makes it great for scheduling a hospital or managing a factory. It can even, if it has enough data, help direct a war.

To get accurate results, however, you need more than the Cloud. You need sensors on everything in the system you’re managing. You need to know where your people are, the condition of every machine they’re using, and some expectation of demand. Palantir doesn’t have that data. That’s why Ukraine is not yet winning its war.

The Machine Internet leads to an Internet of Systems. With enough clients, water systems, electric systems, and traffic networks all become much more efficient. You can plug leaks, manage demand, and optimize the road system. But software is just one piece of it.

LLMs Be Stupid

The other problem facing the industry is that today’s Large Language Models (LLMs) are stupid.

This is especially obvious when the output is text. ChatGPT is a plagiarism machine. It collects, then tries to summarize, a firehose of data. Images, “film” clips, code snippets, and voice chats seem like magic, because computers couldn’t produce them directly before. We can’t properly evaluate their quality because there’s nothing to measure them against.

Text is different. It’s clear that LLMs aren’t making any intuitive leaps. They’re summarizing, like college kids who didn’t study before the final.

Here’s the best analogy I can come up with. AI is great for first-level customer support. It can answer the phone and direct simple queries. But when Karen demands to speak to the manager, you’d better have a manager ready. The AI can’t handle it.

Snake Oil Salesmen

There’s a third problem, and that’s the idiots directing the AI bubble.

Turns out they’re grifters. You can tell by how readily Elon Musk jumped on the bandwagon.

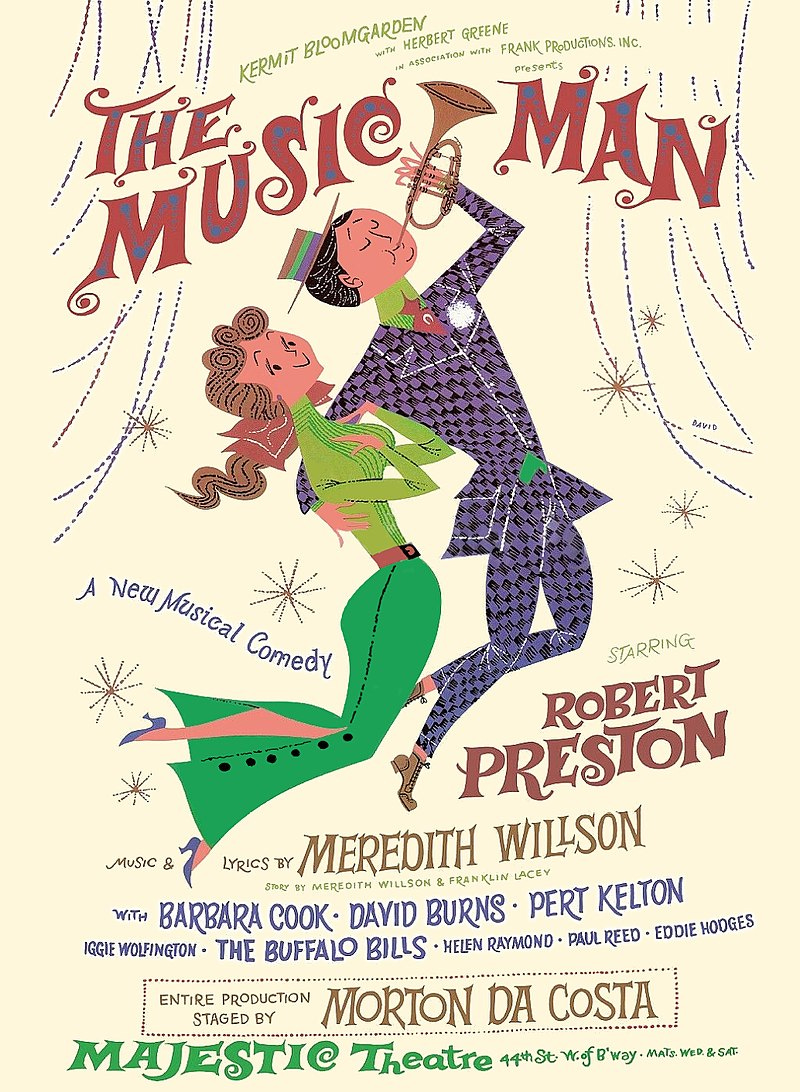

The people at the top of the stack are looking out for themselves, not the customers. This is the whole plot of The Music Man. Harold Hill says he can create bands from scratch. But what he’s selling are just musical instruments. He can’t read a note of music. It’s the music teacher, operating in the background, who provides the plot twist. She does the work he can’t.

Getting value from AI, in other words, is hard work. It’s not magic. It can only be done application by application, by people deeply embedded in the technology and putting in long hours. Salesmen sell. You need engineers to engineer things.

When Will AI Be Found Out?

Probably when today’s computer “users” are told they must buy it.

That could happen as early as this fall, when Amazon rolls out the next version of its Alexa chatbot. There are about 500 million Alexa users already. But take-up slowed to a trickle when its limitations became obvious. Now Amazon wants to make Alexa more of an Internet front-end. That’s the first step toward making it your personal secretary, which is the goal.

But giving it these advanced capabilities is expensive. Amazon must get all that Nvidia money back somehow. The cost of the new subscription Alexa is still unknown. Chances are there will be lots of free trials and teaser rates. But at some point, probably around Christmas, 500 million customers are going to be told to pony up.

How many will do it? How much will they pay? Will there be a rush to buy more Alexa-enabled hardware? Or will a lot of Alexas wind up in landfills?

I don’t see the hype matching reality and, when that becomes obvious, today’s AI boom will bust.